After thinking about time handling for a while (I wrote a book about it called PostgreSQL: Time and Temporal Data), I like to break down time handling into four different levels ordered by complexity and the likelihood that you’ll encounter these issues:

- Date and time storage and time based calculations.

- Time zones, DST and related calculations.

- Storing records about things that are in effect for a period of time (like pricing, employment, contracts and many, many other things).

- Keeping track of the history of changes to data across multiple tables.

However, the unifying characteristic of time at any level, it seems, is that it’s always more complicated than you might expect :)

Level 1: Date and time storage and time based calculations

Even at this level, time is not straightforward to deal with. Even though I’ve been working a lot with time series for the last decade or so, handling time never got easy. There’s always one more thing that trips you up. For example, this simple query doesn’t do what you’d intuitively expect it to do:

select *

from users

where now() - created_at < interval '1 year';

Can you spot the problem? Actually, this query isn’t going to return any users created more than 360 days ago! (There’s a good reason for this once you understand how intervals work in Postgres.)

Here is another subtlety: I noticed that many developers think that now() returns current time - but that’s not correct! (It returns the timestamp at the start of the current transaction.)

Handling dates and times is tricky because of the complicated motion of our planet combined with the sometimes haphazard superstructure of different calendars, time zones, daylight saving time and various conventions for slicing and dicing time periods. There’s a long list of things that may not be quite what you expected.

Level 2: Time zones, DST and related calculations

Then comes a day when your company gets a customer in another country, or your software has to present project data to users in different parts of the world, or you have to keep track of future events for globetrotting users of your applications. This is where time zone support comes into the picture.

As Patrick McKenzie put it:

“If you’ve ever wanted to play What Fresh Hell Is This for a few years, I recommend building any product with times seen by 2+ people each.”

Time zones can be a source of countless bugs, partially because a lot of people “misunderestimate” them. I’ve seen that some developers think that a time zone is just a constant offset, or that the timestamp with time zone type actually stores the time zone, or that countries change their time zone during daylight saving time.

Your queries start getting peppered with fun bits like this:

select timestamp '2017-08-13 00:00:00' at time zone 'Australia/Sydney' at time zone 'UTC';

Even this single line presents a lot of questions. Why is at time zone used twice? Are we converting from Australian time to UTC or vice versa? Why do we need UTC anyway?

Many developers find time zones to be a pain, and after working with them they either want to run away screaming or they write a manifesto about abolishing time zones (which wouldn’t make things any easier, by the way).

Level 3: Keeping track of data validity periods

Once you get adept at wrestling time zone code, you’re on level two, but it turns out that you’re still only at base camp and the peak of this learning curve is somewhere above the clouds, because there are at least two more levels of complexity awaiting you. The third and fourth level are about working with what’s called temporal data.

Level 3 is concerned with storing records about things that are in effect for a period of time (like pricing, employment, contracts and many, many other things).

Fortunately, there’s been research in this area going back many decades, and there’s a book by Richard Snodgrass called “Developing Time-Oriented Databases” that goes in depth on the techniques for working with temporal data.

Unfortunately, the book was published in 1999, when Postgres was still in its infancy, so the examples are in a prehistoric dialect of SQL and instead of Postgres we get examples for DB2 and Oracle 8.

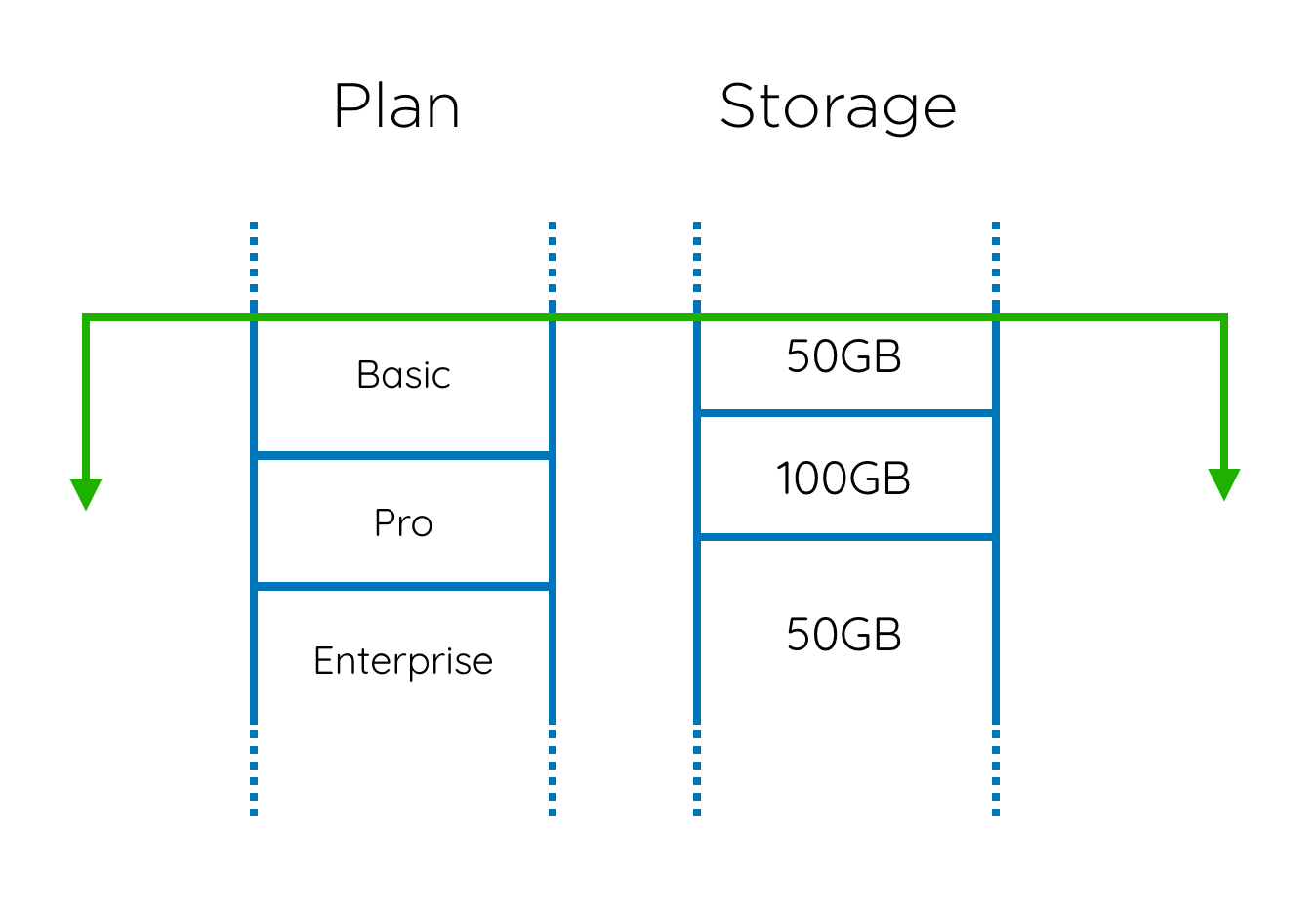

To give you an idea, consider this example. Suppose you provide software as a service, so you are storing information about the customers’ plans. Your service also allows customers to buy extra storage, with the pricing linked to the plan they’re on.

So, to find out the price at a particular point in time, it would be a simple join:

select plan, storage_size

from cust_plans

join cust_storage using (cust_id)

where cust_id = $1

Now imagine that you would like to find out how much a customer has paid you in the last year (a totally reasonable, basic kind of request). In order to allow for this question to be asked, you need to store the history for each customer, something like this (the green line shows the start of the period in question):

So, the customer stays on a plan for a period of time and then upgrades or downgrades. They also upgrade and downgrade the extra storage, and the overlap gives you 5 periods with different pricing which need to be summed up.

Suddenly, we’re not in Kansas anymore, and we can’t just do a join to get this result. We need to calculate these 5 periods based on the records in both tables.

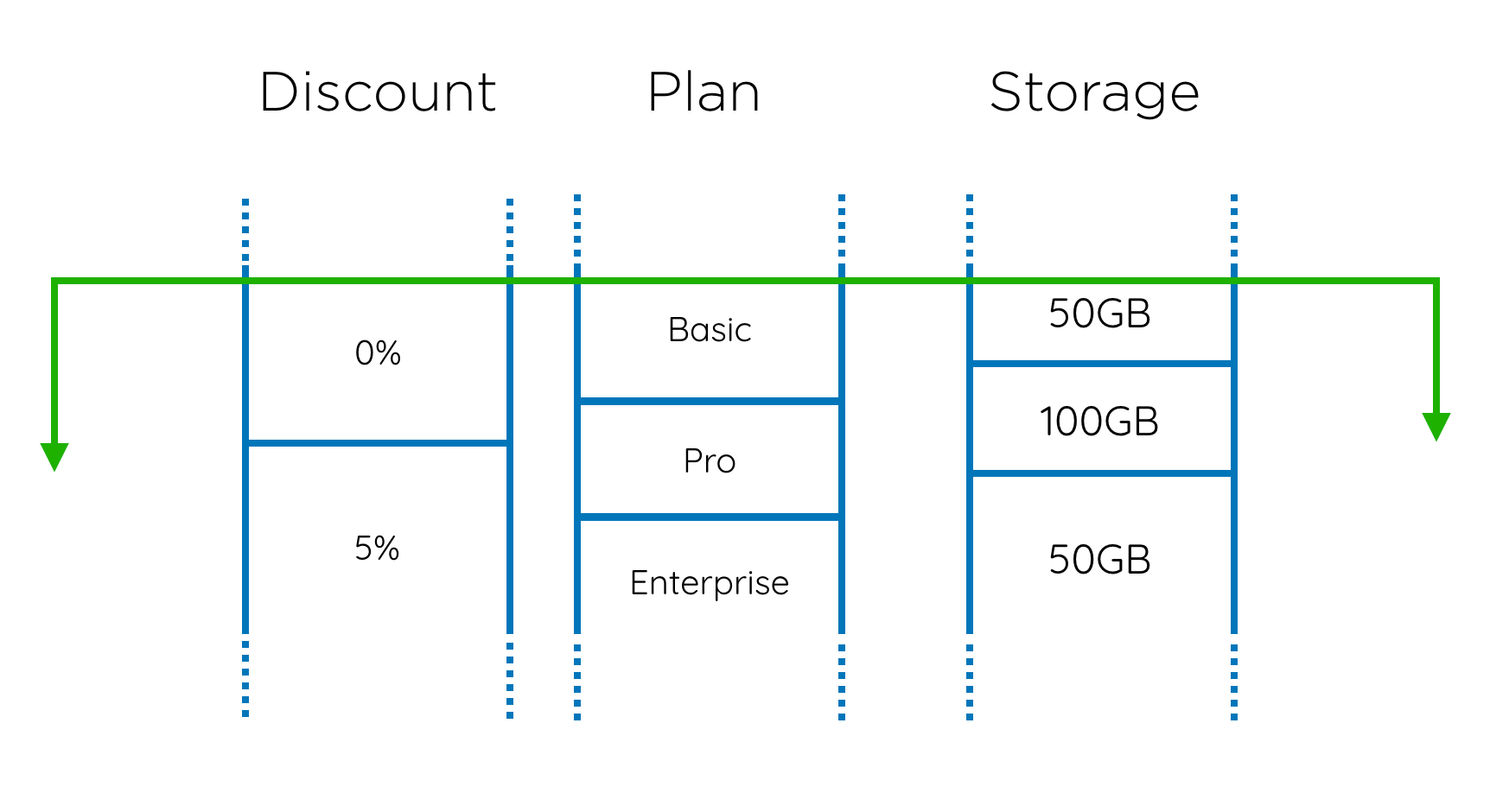

This isn’t all! What if we can also apply discounts for a period of time? It could look something like this:

Obviously, this is going to result in much more complex queries.

This kind of problem can be managed by adding “valid time” to your period. In terms of schema changes, it just means adding a start and end time to the table, either as a pair of columns or as a single time range column (daterange, tsrange or tstzrange in PostgreSQL). The bulk of what you need to know is the techniques for working with valid time - querying the data, joining temporal tables, performing modifications, enforcing constraints etc.

Level 4: Recording history

Level 4 is concerned with keeping track of the history of changes to data across multiple tables.

Continuing with the example of pricing plans and discounts, since human operators set the discount, it’s possible that they make a mistake, so we also need the ability to reverse or amend the discounts. But, for billing and audit purposes, we need to retain all of the history of discounts, including incorrect discounts and the periods that they applied for. Imagine that you put a discount in place, the invoice went out to a customer, and then you realise the rate was wrong and you correct it - the next invoice needs to include a corresponding adjustment. Start thinking about the queries this obscure little procedure requires, and you should get a healthy feeling of dread.

There are multiple approaches to dealing with history. You could log each version of the row before it changes, or you could keep a log of the operations (inserts, updates, deletes) applied to it. You could store the historical data together with the current data, or you could have a separate history table. Finally, you could add the so called “transaction time” to your table, which records the lifetime of a row. Transaction time is quite similar to valid time (identical in terms of schema changes), but it does have different semantics. For example, a transaction period implies that rows with a period that ends in the past cannot be altered. A transaction period also cannot end in the future (it either ends in the past or hasn’t ended yet).

Level 4 is complicated by the fact that you might have two different time dimensions in a single table, i.e. both valid time and transaction time. Luckily, these dimensions can be considered orthogonal, but you may still encounter the need to query across dimensions (for example, joining a row that’s valid over a particular period with multiple versions of a row in another table).

SQL support

Until SQL:2011, SQL only dealt with levels 1 and 2 to some extent. SQL:2011 introduced a number of temporal features, effectively adding support for both valid time and transaction time (SQL:2011 refers to valid time as application time, and transaction time as system time). I believe this support is partial, i.e. not every kind of temporal query you might want is supported with the new syntax, and there will still be hand-crafted SQL involved.

As of PostgreSQL 10, these features aren’t implemented, so you have to rely on either extensions (like temporal_tables) or just plain SQL.

Comments or questions? I’m @alexkorban on Twitter.

“PostgreSQL: Time and Temporal Data” is a short book that will help you get it under control. Master Postgres time tools, get a handle on time zones, learn how to represent and query complex temporal data.

Pop in your email to receive a sample chapter.

You will also get notifications of new posts along with other mailing list only freebies.