In software development these days, we try to build a blameless work culture, one which accepts that mistakes will happen and treats them as opportunities to do better in the future. The blameless approach is very useful because people spend less time worrying about the negative consequences of possible mistakes (and, most likely, less effort trying to evade blame). It also shifts the focus onto system improvements, which is a far more productive course of action in most circumstances.

So, for example, in production incident post-mortems, the focus is entirely on how we could improve systems and processes to prevent a recurrence of similar incidents in the future, and no time is spent figuring out who was at fault.

There was something missing for me in the process, though. We’d talk about a bunch of improvements, from little things like documentation to heavy-duty testing systems, then select a subset that seemed easy enough to implement and move on. It felt kind of arbitrary at times.

Besides, if we take this idea of “no blame” to the extreme, then no matter how egregious and preventable the mistakes, no individual has to take responsibility for their actions because “we should have had better systems”. That seems like a cop-out.

A few days ago I came across a comment which got me thinking about whether individual responsibility still has a place:

> When you treat every negative outcome as a system failure, the answer is more systems. This is the cost of a blameless culture. There are places where that’s the right answer, especially where a skilled operator is required to operate in an environment beyond their control and deal with emergent problems in short order. Aviation, surgery. Different situations where the cost of failure is lower can afford to operate without the cost of bureaucratic compliance, but often they don’t even nudge the slider towards personal responsibility and it stays at “fully blameless.”

This is very interesting! It points out that there is actually a spectrum here. At one end, we have complete individual responsibility and zero support from systems and processes. At the other end, we have some hypothetical system capable of preventing every possible type of error: imagine formal verification, layers of reviews, a profusion of automated tests and checks, and so on.

The idea is that the human factor is eliminated wherever possible. (Even with formal verification, it isn’t really possible to remove the human factor entirely since, at the end of the day, someone has to feed in the requirements and assumptions.)

Thinking about it this way makes it quite obvious that there is a cost aspect to moving along this spectrum, just as there is a cost associated with possible mistakes.

When we first start moving away from total reliance on individual responsibility, introducing systems and processes has a high payoff. But that payoff tapers off once the highest-impact failures are mitigated. At some point, it is no longer cost-effective to keep introducing formalised or automated checks, and individual responsibility is back on the table (with the full understanding that mistakes will sometimes occur and won’t lead to finger-pointing).

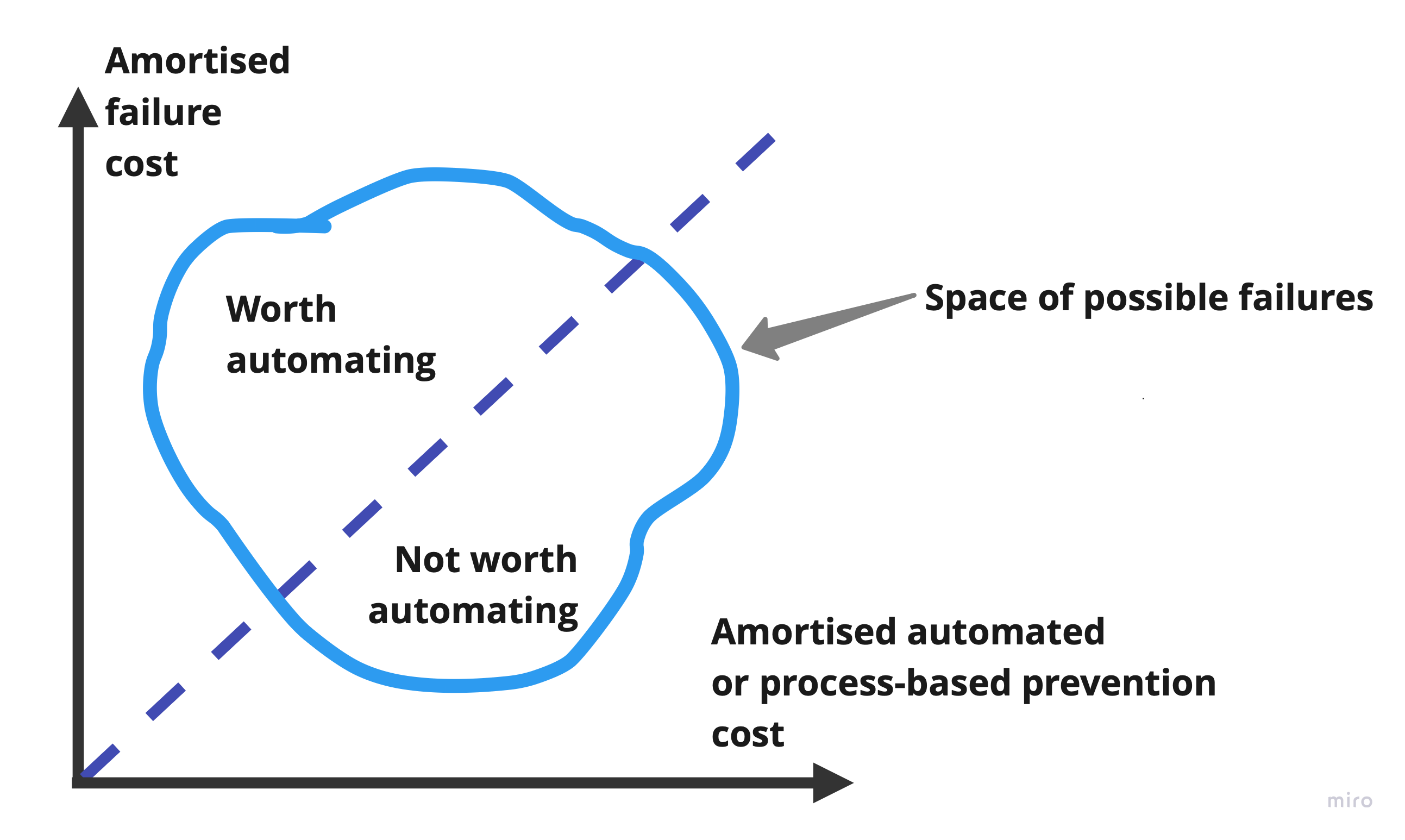

In other words, there is a tradeoff between the impact of mistakes going uncaught and the cost of putting in place the systems and processes needed to prevent them.

Naturally, the cost of failure depends on the industry. Aerospace and healthcare are examples of industries where it’s worth going to great lengths with systematic mitigation, because the cost of failure is very high (and, at the top end, involves human lives). On the other hand, for a run-of-the-mill internal app used by a handful of people, it may only be worth doing the basics.

So, with all of this in mind, we now have a rubric for assessing proposals for failure prevention. The cost of implementing and operating a proposal, amortised over a given period, needs to be compared with the estimated amortised cost of the uncaught failures it would prevent (taking into account their frequency, severity and so on) over the same period.
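To make the comparison concrete, here is a minimal sketch of the arithmetic in Python. Everything in it is an illustrative assumption rather than a prescribed method: the `Proposal` fields, the straight-line amortisation of the one-off cost, and the `fraction_prevented` factor (a way of acknowledging that a given check rarely catches every failure). All costs are assumed to be in the same unit, such as person-days or currency, and all the numbers are made up.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    """A hypothetical failure-prevention proposal; every field is an estimate."""
    name: str
    implementation_cost: float    # one-off cost to build it
    annual_operating_cost: float  # ongoing yearly cost to run and maintain it
    failures_per_year: float      # expected uncaught failures per year without it
    cost_per_failure: float       # average cost (severity) of one such failure
    fraction_prevented: float     # assumed share of those failures it catches, 0..1


def amortised_cost_per_year(p: Proposal, years: float) -> float:
    """Yearly cost of adopting the proposal, spreading the one-off cost evenly."""
    return p.implementation_cost / years + p.annual_operating_cost


def avoided_failure_cost_per_year(p: Proposal) -> float:
    """Expected yearly cost of the failures the proposal would prevent."""
    return p.failures_per_year * p.cost_per_failure * p.fraction_prevented


def net_benefit_per_year(p: Proposal, years: float) -> float:
    """Positive means the proposal pays for itself over the period."""
    return avoided_failure_cost_per_year(p) - amortised_cost_per_year(p, years)


if __name__ == "__main__":
    # Made-up figures, purely to show the comparison.
    proposals = [
        Proposal("deploy checklist", 2_000, 500, 4, 3_000, 0.5),
        Proposal("full end-to-end test suite", 60_000, 20_000, 4, 3_000, 0.9),
    ]
    for p in proposals:
        verdict = "adopt" if net_benefit_per_year(p, years=3) > 0 else "rely on people"
        print(f"{p.name}: {net_benefit_per_year(p, years=3):,.0f} per year -> {verdict}")
```

With these invented inputs, the cheap checklist comes out ahead while the expensive test suite does not, which is exactly the situation where the remaining risk is left to individual responsibility. The arithmetic itself is trivial; the hard part in practice is producing honest estimates for the inputs.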

This approach also clarifies the role of the individual: personal responsibility is our fallback in those areas where we’ve intentionally chosen to avoid introducing failure prevention mechanisms because of cost considerations.